Playtika end-to-end test recorder

By Andrey Vishnitskiy

As our project grew, regression testing each new version became a problem. A regression pass occupies all of our QA engineers for two days (40 person-days every 2 weeks). The project is still growing, so every regression pass needs more and more manual QA effort. One way to improve this situation is to automate regression tests, so we began researching existing end-to-end test frameworks.

Our initial requirements were that the tool run on physical devices of any screen size (we support iOS, Android, and Web), keep test execution stable, keep the cost of updating tests minimal, and work without an internet connection (our game fully supports offline play).

Variants

Classic end-to-end test frameworks

First, we tried classic end-to-end test frameworks. We looked at Appium, AltUnity, and Playtika’s own framework.

They all work as wrappers around Unity: they export the scene hierarchy, expose buttons by name, and let you write tests in other languages.

Disadvantages of this approach include:

- Sensitivity to object names and scene hierarchy: renaming an object or moving it in the scene means updating existing tests, which increases maintenance time.

- Sensitivity to button positions on screen (in some cases).

- Sensitivity to load times and to transition times between game states. These tools typically rely on fixed delays between clicks, while the load time of the same level can differ from device to device. This makes test runs unstable and their duration sub-optimal.

- Additional software or servers are required.

- Offline support is not always available.

Classic code tests

The option best suited to our needs is in-code tests. They are written the same way as NUnit tests, but they run while the game is running.

In code, we can reference any button directly and simulate a press on it. The main advantage of this solution is that it is not sensitive to the scene hierarchy, and tests are updated automatically when the code is refactored or modified.

The advantages of such a solution include:

1. Insensitivity to code modification and refactoring

2. Insensitivity to hierarchy and names of prefabs and objects on the scene

3. Insensitivity to button positions

4. The ability to use ‘await’ instead of fixed delays while resources or scenes load, whatever the device

5. Because tests work with game states, we know exactly which state the game is in and what the user sees

6. No intermediate layer of exported objects is needed

7. Any object or variable can be accessed and asserted on
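To illustrate the difference between awaiting and fixed delays (item 4), here is a minimal Python sketch. In the C# version this would be an async method awaiting a condition. `wait_until` and `load_and_wait` are hypothetical helpers, and the 10-second default timeout is only an example value.

```python
import asyncio
import time

async def wait_until(predicate, timeout=10.0, poll_interval=0.05):
    """Poll `predicate` until it is true or `timeout` seconds have passed.

    Unlike a fixed sleep, this returns as soon as the condition holds: a fast
    device doesn't wait needlessly and a slow one isn't cut off early.
    """
    deadline = time.monotonic() + timeout
    while not predicate():
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        await asyncio.sleep(poll_interval)

async def load_and_wait(load_time):
    """Simulate a level that takes `load_time` seconds to load on this device."""
    level = {"loaded": False}

    async def load_level():
        await asyncio.sleep(load_time)
        level["loaded"] = True

    task = asyncio.create_task(load_level())
    start = time.monotonic()
    await wait_until(lambda: level["loaded"], timeout=2.0)
    await task
    return time.monotonic() - start
```

The same test code waits about 0.1 s on a "fast device" (`load_and_wait(0.1)`) and longer on a slow one, with no delay constant to tune.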

Nevertheless, the disadvantages include:

1. Knowledge of C# is required

2. At a minimum, knowledge of the game architecture and of the names of available states and variables is required

Running tests

We tried running tests through the Unity Test Runner. This package can run tests in player builds, but to do so it creates a separate build every time. In other words, tests can’t be run on an arbitrary build of the game; each project has to be built together with its tests.

Building with the Unity Test Runner is also a problem because it compiles all tests at once, and many of them depend on Editor-only tools: tests that use NSubstitute, tests on AssetDatabase, prefab validation, and mock-ups that only work in the Editor. None of these can be compiled into a build, and we don’t want to maintain two versions of the tests, one for the Unity Editor and another for the runtime.

The easiest way to run such tests is to run a separate asynchronous method after the application has loaded.

Recording tests

Another approach we examined was recorder tests. The main idea is to record the player’s input and then play it back. The primary advantage of this approach is how quickly tests can be created: you can record a test in 5 minutes without any knowledge of programming languages. Just press the “Record” button, play the game, and you get a finished test.
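For contrast, this Python sketch shows what a classic input recorder stores: raw tap coordinates and timestamps, replayed with the same delays. `TapEvent`, `InputRecorder`, and `replay` are hypothetical names; the clock, tap, and sleep functions are injected so the logic can be checked without a device.

```python
import time
from dataclasses import dataclass

@dataclass
class TapEvent:
    t: float  # seconds since recording started
    x: float  # absolute screen coordinates in pixels
    y: float

class InputRecorder:
    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._start = clock()
        self.events = []

    def on_tap(self, x, y):
        self.events.append(TapEvent(self._clock() - self._start, x, y))

def replay(events, tap, sleep=time.sleep):
    """Re-send taps at the recorded times and positions.

    The fixed timing and absolute pixel positions are exactly what make this
    style of test fragile: a slower device or a different resolution breaks it.
    """
    elapsed = 0.0
    for e in events:
        sleep(max(0.0, e.t - elapsed))
        elapsed = e.t
        tap(e.x, e.y)
```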

The disadvantages are more or less the same as those of classic end-to-end tests, plus limited testing capabilities: logic constructs and checks cannot be used, beyond whether a button was pressed or not.

Playtika end-to-end test recording

Our approach combines recording tests with writing tests in code, taking the best of both.

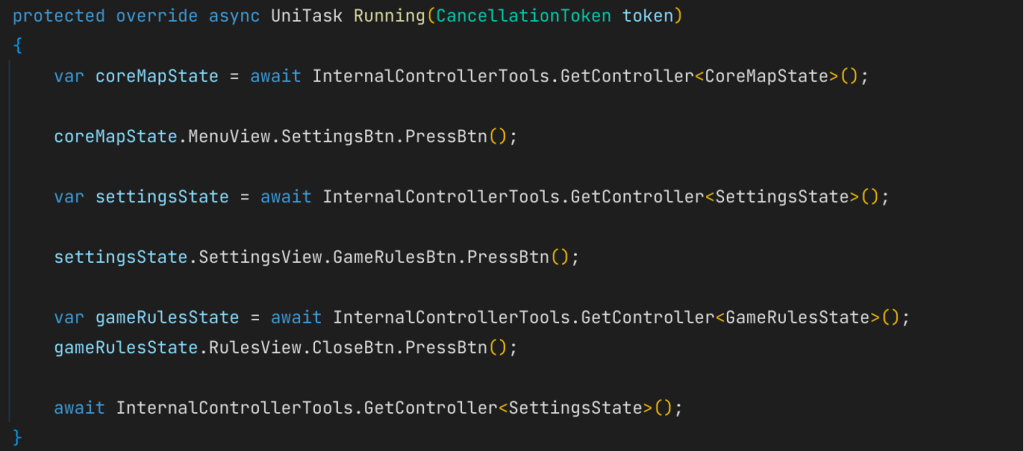

If you look again at how most tests are written, a clear pattern emerges.

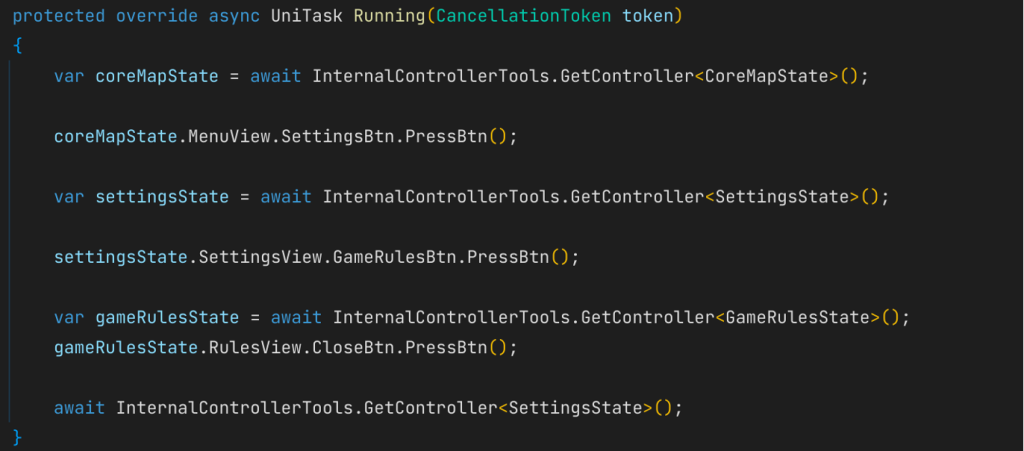

Each time, we take the type of the current state and one of its GameObject variables, and simulate a click on it.

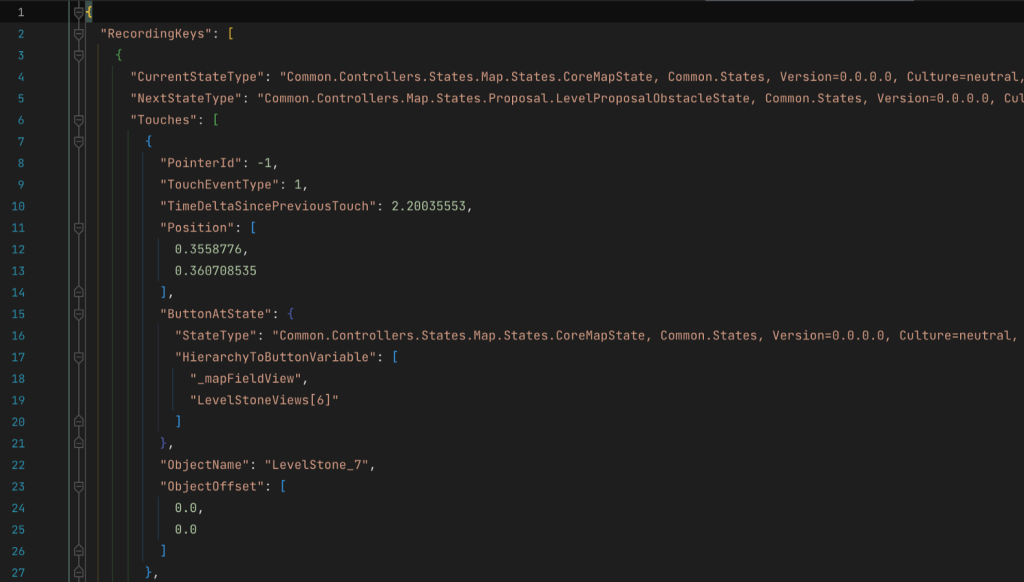

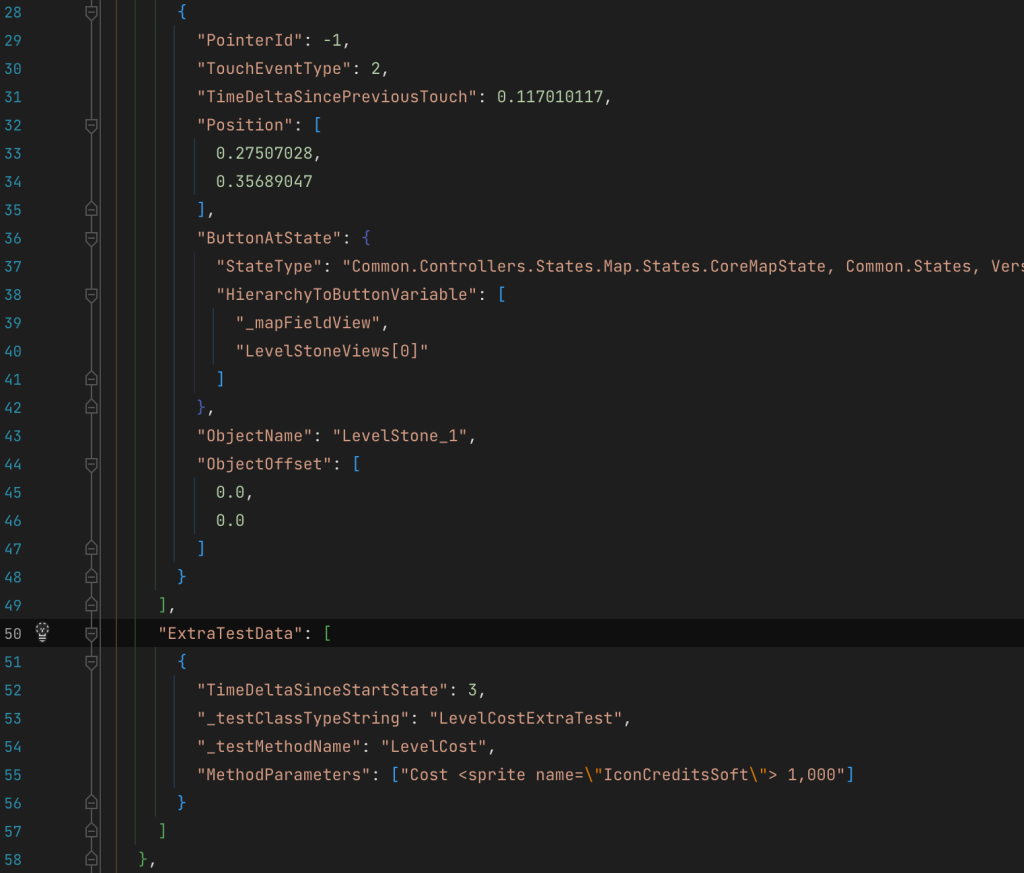

These parameters can therefore be serialized as the type of the current state plus the path to its GameObject variable.
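A minimal Python sketch of such a record and its playback, assuming a JSON list of steps with hypothetical `state` and `path` keys (the real record format isn't given here). The target is found by walking field names from the state object, the Python analogue of C# reflection, so the GameObject's scene name (`btn_x_final_v2` in the example) plays no role.

```python
import json

class GameObject:
    """Hypothetical clickable object; `name` is its scene name."""
    def __init__(self, name):
        self.name = name
        self.clicked = 0

    def click(self):
        self.clicked += 1

class Popup:
    def __init__(self):
        self.close_button = GameObject("btn_x_final_v2")

class ShopState:
    def __init__(self):
        self.popup = Popup()

# A recorded step stores the state type and a dotted field path,
# never the object's scene name or screen position.
RECORD = json.loads('[{"state": "ShopState", "path": "popup.close_button"}]')

def resolve(state, path):
    """Follow a dotted field path from the state object to the target."""
    target = state
    for field in path.split("."):
        target = getattr(target, field)
    return target

def play_step(step, current_state):
    actual = type(current_state).__name__
    if actual != step["state"]:
        raise AssertionError(f"expected state {step['state']}, got {actual}")
    resolve(current_state, step["path"]).click()
```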

Now the Recorder can determine the current game state and the GameObject you clicked on. This way, we get the same tests as in code, but they can be recorded in about two minutes! They also keep the stability of code tests: no dependence on scenes, and no binding to the hierarchy, screen size, or platform.
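The recording side can be sketched the same way: when the player taps an object, search the current state's fields for that exact object and store the state type plus the field path. This Python sketch uses identity comparison and a depth limit; `find_path`, `record_click`, and the demo classes are hypothetical stand-ins for the C# reflection the recorder would use.

```python
class Button:
    pass

class Popup:
    def __init__(self):
        self.close_button = Button()

class ShopState:
    def __init__(self):
        self.title = "Shop"
        self.popup = Popup()
        self.buy_button = Button()

def find_path(root, target, max_depth=3):
    """Depth-limited search of `root`'s fields for the object `target`.

    Returns a dotted path such as "popup.close_button", or None.
    """
    def walk(obj, prefix, depth):
        if depth > max_depth:
            return None
        for name, value in vars(obj).items():
            path = f"{prefix}.{name}" if prefix else name
            if value is target:  # identity, not equality
                return path
            if hasattr(value, "__dict__"):  # descend into plain objects only
                found = walk(value, path, depth + 1)
                if found is not None:
                    return found
        return None
    return walk(root, "", 0)

def record_click(current_state, clicked_object, record):
    path = find_path(current_state, clicked_object)
    if path is None:
        raise LookupError("clicked object is not reachable from the current state")
    record.append({"state": type(current_state).__name__, "path": path})
```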

Validation consists of checking the current state: if we press button N and expect the game to move to state Y, but it doesn’t reach state Y within 10 seconds, the test fails. A test is also marked as failed if any Debug.LogError call occurs during the run.
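A minimal Python sketch of this validation rule, with Python's `logging` standing in for `Debug.LogError`: press the button, poll the state name until it matches or the timeout expires, and fail if any ERROR-level message was logged meanwhile. `validate_step` and `ErrorCollector` are hypothetical names; in Unity the analogous hook would be a subscription to log messages, for example `Application.logMessageReceived`.

```python
import asyncio
import logging
import time

class ErrorCollector(logging.Handler):
    """Collects ERROR-level log records, standing in for Debug.LogError."""
    def __init__(self):
        super().__init__(level=logging.ERROR)
        self.errors = []

    def emit(self, record):
        self.errors.append(record.getMessage())

async def validate_step(press, get_state_name, expected_state, timeout=10.0):
    """Press a button, then wait for `expected_state`; fail on timeout or logged errors."""
    collector = ErrorCollector()
    root = logging.getLogger()
    root.addHandler(collector)
    try:
        press()
        deadline = time.monotonic() + timeout
        while get_state_name() != expected_state:
            if time.monotonic() >= deadline:
                return f"failed: no transition to {expected_state} within {timeout} s"
            await asyncio.sleep(0.05)
        if collector.errors:
            return "failed: error logged: " + collector.errors[0]
        return "passed"
    finally:
        root.removeHandler(collector)
```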

To understand what happened visually during the test and why it failed, the screen can be recorded, or a screenshot can be taken on each click.

Example of creating an end-to-end test and playing it back on different aspect ratios:

Extra tests in Playtika Recorder

End-to-end tests also need to check the content of some visual elements. For this purpose, we added extra tests: blocks of code that are inserted into the test record and executed during playback. Typically, they check the value shown on a credit meter, how many stars the user receives, or how many cards are still on the field. References to these tests are embedded in the record file in the form “run extra test N, X seconds after clicking Y”.

Extra tests can be asynchronous and can be parameterized via strings.
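The extra-test mechanism might look roughly like this Python sketch, assuming a registry of named async checks that each receive one string parameter. The entry keys (`after_click`, `delay`, `test`, `param`) and the `stars_equal` check are illustrative only; they mirror the “run extra test N, X seconds after clicking Y” format rather than the actual file layout.

```python
import asyncio

EXTRA_TESTS = {}  # hypothetical registry: name -> async check(game, param)

def extra_test(name):
    def register(func):
        EXTRA_TESTS[name] = func
        return func
    return register

@extra_test("stars_equal")
async def check_stars(game, param):
    expected = int(param)  # the string parameter is parsed by the check itself
    if game["stars"] != expected:
        raise AssertionError(f"stars: {game['stars']} != {expected}")

async def run_extra(entry, game):
    """Run the named extra test `delay` seconds after its click step."""
    await asyncio.sleep(entry["delay"])
    await EXTRA_TESTS[entry["test"]](game, entry.get("param", ""))

# Example entry attached to a click step (format is illustrative).
ENTRY = {"after_click": "LevelComplete.continue_button", "delay": 0.1,
         "test": "stars_equal", "param": "3"}
```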

A consistent test execution environment

To ensure stability, a test must run with the same environment and settings every time. For end-to-end tests, this means the same time delta, the same set of enabled features, and the same game configs for every run.

For offline games, we mostly only need to replace the player’s save. For online games, however, the player’s settings must be configured identically for each test. All of this is handled by internal automation tools.

On the client side, these settings are written directly into the player’s save. When saves are encrypted or split across several files, we place a prepared save file into Application.persistentDataPath, overwriting the existing save, before starting the application.
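The save-replacement step could be sketched in Python as a host-side helper run before launch, assuming both the fixture save files and the app's data directory are reachable as local paths. `install_fixture_save` is a hypothetical name.

```python
import shutil
from pathlib import Path

def install_fixture_save(fixture_dir, data_dir):
    """Copy every file from a prepared save fixture into the app's data dir.

    Files with the same names are overwritten, so each run starts from an
    identical save. Call this before the app is launched.
    """
    fixture_dir, data_dir = Path(fixture_dir), Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(fixture_dir.iterdir()):
        if src.is_file():
            shutil.copy2(src, data_dir / src.name)
            copied.append(src.name)
    return copied
```

This only overwrites files present in the fixture; leftover files from earlier runs would need separate cleanup if the game reads them.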

On iOS, to access Application.persistentDataPath from outside the app, you need to enable file sharing (set UIFileSharingEnabled to true in Info.plist). You can then browse this directory through the Finder.
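If the Xcode project exported by Unity is patched from a build script, setting the flag could look like this Python sketch using the standard `plistlib` module. Inside Unity, a C# post-process step using `PlistDocument` from `UnityEditor.iOS.Xcode` is another option. `enable_file_sharing` is a hypothetical helper name.

```python
import plistlib
from pathlib import Path

def enable_file_sharing(info_plist_path):
    """Set UIFileSharingEnabled = true in an exported project's Info.plist."""
    path = Path(info_plist_path)
    with path.open("rb") as f:
        info = plistlib.load(f)
    info["UIFileSharingEnabled"] = True  # other keys are left untouched
    with path.open("wb") as f:
        plistlib.dump(info, f)
    return info
```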

Supporting existing tests

With code tests, renaming or refactoring updates all tests at once. Now that we serialize our types and variables in a JSON record, we also run deserialization tests to make sure no referenced type or variable was changed in a pull request. If one was, we run “Replace all in path” to change the old type name to the new one. This ensures there are no broken tests in development when types or variables are renamed.
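A minimal Python sketch of such a check, assuming the records are already parsed into dictionaries and that the set of valid state types and field paths can be collected from the current code (by reflection in C#). `broken_steps` and the `known_paths` structure are hypothetical.

```python
def broken_steps(records, known_paths):
    """Return (file, step index, reason) for every step that no longer resolves.

    records:     {file name: [{"state": ..., "path": ...}, ...]}
    known_paths: {state type name: set of valid dotted field paths}
    """
    problems = []
    for file_name, steps in records.items():
        for i, step in enumerate(steps):
            state, path = step["state"], step["path"]
            if state not in known_paths:
                problems.append((file_name, i, f"unknown state {state}"))
            elif path not in known_paths[state]:
                problems.append((file_name, i, f"unknown path {state}.{path}"))
    return problems
```

Run on every pull request, a non-empty result points at exactly which records need the rename applied.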

Only tests?

The framework also lets us script arbitrary gameplay scenarios. For example, we used it to automate loading-time and performance tests.

This saved up to 4 hours of manual QA work per loading-time or performance measurement.
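Measuring a scripted scenario usually means running it several times and summarizing the durations. This Python sketch assumes a synchronous `scenario` callable and an injectable clock; `measure` and the chosen statistics are illustrative, not the metrics the team actually collects.

```python
import statistics
import time

def measure(scenario, runs=5, clock=time.perf_counter):
    """Run a scripted scenario several times and summarize its duration in seconds."""
    durations = []
    for _ in range(runs):
        start = clock()
        scenario()
        durations.append(clock() - start)
    return {
        "runs": runs,
        "mean": statistics.mean(durations),
        "median": statistics.median(durations),
        "max": max(durations),
    }
```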

Summary of usage

We successfully use our recorder to write end-to-end tests and run them on devices, as well as to automate manual regression tests and routine operations such as collecting performance metrics.

As a result, we now have 150+ end-to-end tests, more than 35% of the regression suite is automated, and the tests run on preproduction Android builds on physical devices. Execution stability is as good as with in-code tests. Finally, our manual QA engineers keep creating new end-to-end tests themselves.

We plan to automate up to 80% of our regression suite.